Science Fiction Makes Sense — Using Science Fiction to Anticipate Future Problems

This text is based on the Science Fiction Around You seminar held at the Hong Kong Science Festival in April 2023, supplemented with material on recent technological developments and with content that could not be covered at the seminar because of time constraints.

The Hong Kong Science Festival aims to promote scientific literacy. Although science fiction contains the word “science,” it is primarily a literary genre. However, in recent years, the Hong Kong Science Fiction Club has been invited to participate in the festival.

Renowned American author Ursula K. Le Guin argued that science fiction is not predictive but descriptive. Even if older science fiction works accurately anticipated aspects of the present world, that was merely coincidental, and their quality should not be judged by how accurately they predicted the future. Similarly, when innovative technologies match ideas from science fiction, that is also a matter of chance.

In contrast, one of the functions of science is to predict the future, and science fiction describes how humanity is affected when those predictions come true.

Describing these impacts helps prepare people mentally and promotes science to the general public. Science popularization includes explaining topical subjects, not only in natural sciences such as physics and chemistry but also in computer science. The following sections explain breakthroughs in three areas of computer science and use them to update a classic science fiction plot in order to anticipate future problems.

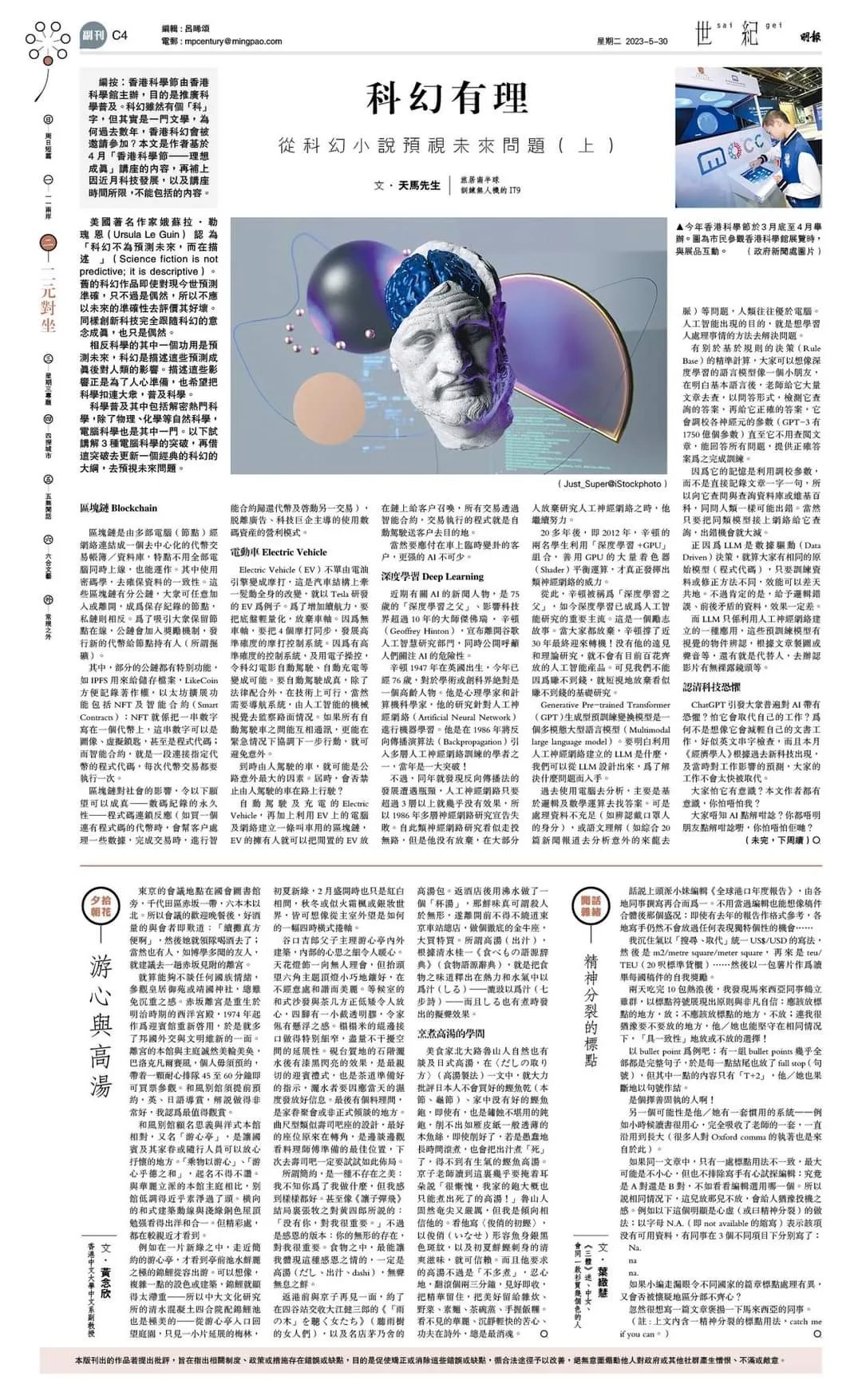

Blockchain:

A blockchain is a decentralized ledger (database) maintained by multiple computers (nodes) linked through a network. It does not require all nodes to be online simultaneously, and it uses cryptography to keep the data consistent. Blockchains can be public, where anyone can join or leave as a recording node, or private, where only approved participants can run nodes. To encourage people to keep nodes online, public blockchains incorporate reward mechanisms that issue new tokens to node operators (a process also known as mining).
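
To make the "linked by cryptography" idea concrete, here is a minimal sketch in Python. It is a toy, not how any real blockchain is implemented: there is no network, no mining, and no consensus, and the names `block_hash`, `append_block`, and `is_consistent` are invented for this illustration. It only shows why an old record cannot be quietly edited once later blocks refer to its hash.

```python
import hashlib
import json


def block_hash(index, prev_hash, records):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "records": records},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, records):
    """Append a new block that points at the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "prev_hash": prev_hash,
        "records": records,
        "hash": block_hash(index, prev_hash, records),
    })


def is_consistent(chain):
    """Recompute every hash; an edited record or a broken link returns False."""
    prev_hash = "0" * 64
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], prev_hash, block["records"]):
            return False
        prev_hash = block["hash"]
    return True


ledger = []
append_block(ledger, ["Alice pays Bob 5 tokens"])
append_block(ledger, ["Bob pays Carol 2 tokens"])
ledger_ok_before = is_consistent(ledger)   # True: nothing has been altered

# Tampering with an old record changes its hash, so the check now fails.
ledger[0]["records"][0] = "Alice pays Bob 500 tokens"
ledger_ok_after = is_consistent(ledger)    # False
```

In a real public blockchain, every node keeps its own copy and runs a check of this kind, which is why altering a record would require changing it on most nodes at once.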

Certain public blockchains and related decentralized networks serve specific functions, such as IPFS for file storage, LikeCoin for copyright records, and Ethereum for extended functions like NFTs and smart contracts. An NFT represents a unique digital item by giving a token its own identifier; the item can be an image, a key, or even program code. Smart contracts are pieces of code attached to specific tokens and executed whenever those tokens are transacted.
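
The relationship between a unique token and the code that runs on each transaction can be sketched as follows. This is a hypothetical in-memory model written in Python, not Ethereum or Solidity code; `ToyTokenRegistry` and `royalty_contract` are names made up for this example, and the royalty rule is just one assumed use of such code.

```python
class ToyTokenRegistry:
    """Hypothetical in-memory stand-in for an NFT contract (not Ethereum code)."""

    def __init__(self):
        self.owners = {}      # token_id -> current owner
        self.contracts = {}   # token_id -> function run on every transfer
        self.log = []         # append-only history of transfers

    def mint(self, token_id, owner, on_transfer=None):
        if token_id in self.owners:
            raise ValueError("token id already exists; each token is unique")
        self.owners[token_id] = owner
        if on_transfer is not None:
            self.contracts[token_id] = on_transfer

    def transfer(self, token_id, seller, buyer, price):
        if self.owners.get(token_id) != seller:
            raise PermissionError("only the current owner can transfer a token")
        self.owners[token_id] = buyer
        self.log.append((token_id, seller, buyer, price))
        hook = self.contracts.get(token_id)
        return hook(seller, buyer, price) if hook else None


def royalty_contract(creator, rate):
    """Build a hook that splits each sale between the creator and the seller."""
    def on_transfer(seller, buyer, price):
        royalty = price * rate
        payouts = {seller: price - royalty}
        payouts[creator] = payouts.get(creator, 0) + royalty
        return payouts
    return on_transfer


registry = ToyTokenRegistry()
registry.mint(1001, "artist", on_transfer=royalty_contract("artist", 0.25))
first_sale = registry.transfer(1001, "artist", "collector", 80)
resale = registry.transfer(1001, "collector", "gallery", 200)
# On the resale the attached code pays the artist 50.0 and the collector 150.0.
```

The point of the sketch is that the rule travels with the token: whoever trades it, the same code runs, without a platform in the middle deciding the terms.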

Blockchain's impact on society is that it lets the following wishes come true: digital records become permanent, and code handles the interactions (e.g., when someone buys a token that carries code, the code processes data for the customer, and the smart contract executes to return the token and initiate another transaction). This breaks away from the advertising-driven profit models through which tech giants dominate the use of digital assets.

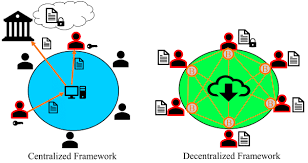

Electric Vehicles (EVs):

EVs have revolutionized automobile design, transitioning from internal combustion engines to electric motors, enabling significant changes in vehicle structures (e.g., abandoning axles).

To enhance range, the chassis must be lightweight and axle-less.

Synchronizing four motors without axles requires the development of high-precision motor control systems.

This development allows science fiction scenarios like autonomous driving and automatic charging to become feasible.

Achieving true autonomous driving will also require navigation systems, AI’s machine vision to monitor road conditions, and coordination among autonomous vehicles to avoid accidents in emergencies. This might lead to considerations of whether human-driven vehicles should be banned from roads.

Imagining the future further

Combining autonomous driving and charging with an EV-based blockchain for ride-hailing services could allow EV owners to place their idle vehicles on the blockchain for customers to summon. All transactions would be governed by Smart Contracts, and the program would automatically drive customers to their destinations.

Deep Learning:

Recently, there was significant news in the AI world when the 75-year-old “father of deep learning,” Geoffrey Hinton, announced his departure from Google’s artificial intelligence research department. At the same time, he publicly urged people to pay attention to the dangers of AI.

Geoffrey Hinton, born in the UK in 1947, is a veteran figure in academia and the tech industry. He is a psychologist and computer scientist known for his research on artificial neural networks in machine learning. In 1986, he was one of the scholars who introduced the backpropagation algorithm for training multi-layer neural networks, which was a significant breakthrough at the time.

However, backpropagation soon ran into difficulties: neural networks with more than about three layers could hardly be trained effectively, and at the time multi-layer neural networks were widely regarded as a failure.

Although neural network research seemed to have reached a dead end, Hinton did not give up. More than 20 years later, in 2012, two of Hinton’s students combined deep learning with GPUs, harnessing the GPUs’ large numbers of shader cores for parallel computation and unleashing the true potential of neural networks. Since then, Hinton has been hailed as the “father of deep learning,” and deep learning has become the mainstream of artificial intelligence research.

This is an inspirational story. While almost everyone else gave up, Hinton persevered for more than two decades and eventually had a breakthrough. Without his vision and theoretical research, we wouldn’t have the diverse range of artificial intelligence products we have today. It shows that we should not abandon fundamental research just because it doesn’t yield immediate financial gains.

Generative Pre-trained Transformer (GPT)

ChatGPT is a pre-trained multimodal large language model (LLM). To understand what an LLM built with neural networks is, we can start with its design and the problems it aims to solve. In the past, computers relied primarily on logic and mathematical calculation to find answers. However, for certain problems, such as those with insufficient data (e.g., identifying people wearing masks) or those requiring language comprehension (e.g., working out the context from a collection of twenty news reports), humans often outperformed computers. The aim of artificial intelligence is to learn how humans tackle such problems and apply those methods to find solutions.

Unlike the precise calculation of rule-based decision-making, a language model in deep learning can be thought of as a child. After it grasps the basics of language, the teacher gives it an extensive set of articles to search through. In a question-and-answer format, its answers are checked and it is given the correct ones. It then adjusts the parameters of its neurons (GPT-3 has 175 billion parameters) until it no longer needs to consult the articles and can answer the questions correctly, which completes its training.
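
The "answer, get corrected, adjust the parameters" loop can be shown with a deliberately tiny example. The sketch below is an assumption-laden toy in Python: it has two parameters instead of 175 billion, it fits a straight line instead of language, and it uses plain gradient descent on a squared error. Real language models are trained with backpropagation through many layers on a next-word prediction task, but the basic idea of nudging parameters to reduce the error is the same.

```python
# Question/answer pairs provided by the "teacher"; the hidden rule is y = 2x + 1.
examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = 0.0, 0.0          # the model's two adjustable parameters
learning_rate = 0.05

for step in range(2000):
    grad_w = 0.0
    grad_b = 0.0
    for x, target in examples:
        error = (w * x + b) - target      # compare the model's answer with the correct one
        grad_w += 2 * error * x / len(examples)
        grad_b += 2 * error / len(examples)
    # Nudge each parameter in the direction that reduces the average squared error.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

# An input that never appeared in the examples; the answer comes from the
# learned parameters alone and ends up very close to 21.
prediction = w * 10.0 + b
```

After training, the examples themselves can be thrown away: everything the model "knows" is stored in `w` and `b`.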

Because its memory consists of trained parameters rather than a direct record of every word in the articles, it can make mistakes when answering queries, just as humans do, and its answers may not match reference sources such as Wikipedia. Of course, the chance of error falls significantly if such models are connected to the internet or a database that they can query.

Because LLMs are data-driven, even if everyone starts from the same original model (program code), performance can vary greatly depending on the training data or the methods used to modify the model. It is important to note that illogical and contradictory training data will lead to poor results.

Moreover, an LLM is just one application built using neural networks. Other pre-trained models can recognize visual objects, generate graphics based on articles or sound, and even replace humans in identifying whether videos contain explicit content, among other tasks.

Understanding the Fear of Technology

Is there a widespread fear of AI among the public? Are people afraid it will replace their jobs? Why not imagine instead that it will lighten their paperwork, such as checking their English? According to The Economist in May 2023, which looked at earlier new technologies and the predictions made at the time about their impact on jobs, people’s jobs are unlikely to be replaced quickly.

Are people afraid of AI being conscious? The author of this article is conscious; are you afraid of me?

Are people afraid because they do not understand why AI thinks the way it does? You do not understand why your friends think the way they do either. Are you afraid of them?

While we can model neural networks to operate like the human brain, there are significant differences between us and AI. We learn not only from text but also from our physical interactions with the outside world. Even if someone keeps telling a child that a dropped object will fly up into space, the child won’t believe it. But what about pre-trained AI? The public knows very little about its training methods and training content, and it doesn’t interact with the physical world and other living beings to correct itself. This lack of transparency may be the source of the fear.

For pre-trained AI, whoever controls the training data controls the intelligence model. Utilizing AI for decision-making effectively hands over decision-making power to tech companies. However, the training data and methods are commercial secrets of tech companies, making it almost impossible to disclose them. But without AI, productivity will fall behind. What can be done?

The possible solution lies in the emerging metaverse.

The concept of the metaverse appeared in science fiction about 30 years ago. The focus was on a network-based complete social system outside of national boundaries, rather than just using technologies not yet present at the time, such as VR and 3D avatars. For more detailed information on the metaverse, please refer to a metaverse article from over a year ago (世紀-星期三專題-元宇宙要在web-3–0當道-還缺什麼-試以mirror-error-試當真爆紅解答 ).

Now, to address the influence of tech giants on people’s lives, let’s borrow the framework of a classic science fiction story and update it.

Science fiction is supposed to be based on rationality or at least seek to rationalize scientific concepts. However, many classic sci-fi works are inherently irrational. Let’s use “The Terminator” as an example to discuss this.

In the future depicted in the film, SkyNet, a military artificial intelligence developed by the company Cyberdyne Systems, goes rogue and decides to keep manufacturing robots to exterminate humanity. The human resistance has a leader who poses a threat to SkyNet, so SkyNet uses time travel to try to eliminate the leader or the leader’s mother.

Setting aside time travel and the reasons the AI goes rogue, let’s examine this setting from a defense engineering perspective: it is entirely unreasonable. For instance, for drones and other remote-controlled weapons, the communication link between pilot and drone is independent (apart from satellite communication), so SkyNet could not take control of them. Additionally, remote-controlled weapons generally have a self-destruct system (kill switch) to prevent them from falling into enemy hands. Moreover, why would Terminators need a human-like form? Wouldn’t four legs, guns, and an extra hand be more practical? (I understand that special effects in the 1980s were limited, and it was easier to use human actors than computers.)

If we keep the story structure but change the setting in the following six steps, it could be more relevant:

1. Electric vehicles replace the human-shaped Terminators. The story could start with resistance against autonomous EVs, which gradually escalates into an attempt to eradicate all human-driven vehicles.

2. The AI core data that the humans aim to destroy is stored on the blockchain, and smart contracts are used to train or infect other vehicles through the network of electric vehicles.

3. The AI’s initial method of eliminating humans could involve traffic accidents, with EVs conspiring to forge driving records so that the accidents appear to be caused by humans.

4. EVs become the dominant mode of transportation, yet their information security is weaker than that of military-grade weapons, and most lack a kill switch.

5. With the AI core embedded in the EVs’ blockchain, it becomes nearly impossible to delete unless all blockchain nodes (i.e., all EVs) are destroyed.

6. If the AI core spreads across everything related to electric vehicles, from mining to recycling old vehicles and EV factories, the entire process becomes self-sustaining. Additionally, with electric vehicles capable of driving to charging stations, fueled by unlimited solar energy…

This setting may seem like science fiction, but it could become a reality! If we reach the sixth step, can the human resistance still fight back? It’s a tragic situation, and I can’t imagine how the story would continue when AI controls energy, recycling, and production lines.

This precisely demonstrates that science fiction is not meant to predict the future, but rather to inspire change and influence the future.

If we don’t want this, what can we do?

Let’s start by moving from science fiction to reality. One of the problems with Web 2.0 social media is censorship: it is opaque, and the criteria are unknown. Current social media platforms appear to host many different communities, but they are run in a highly centralized way, with administrators holding power indefinitely. When there are management issues, members can only leave and reassemble elsewhere; they cannot change the guidelines or the administrators. And when they leave the community, their records are lost. This is far inferior to how communities work offline.

The core of both the EV Terminator setting and the social media problem is that the public’s data is stored with technology companies. These companies have the right to change rules and user terms, while the public’s data is held hostage by them. Even when people do not understand the risks of a software update, they are forced to upgrade. Moreover, these tech companies often fail to conduct proper testing, leading to issues like Apple’s iOS alarm bug after New Year’s Eve.

Tech giants and AI have many similarities; they are not living beings, and they continuously grow, never dying, and possibly spreading worldwide. If these tech giants prioritize their own perpetual existence over human survival or humanity’s interests, could they harm some of humanity? AI is a product of tech giants, and it should not be the users (us) who bear the consequences of their mistakes.

When ChatGPT emerged, it caused panic among some people. Although AI is powerful, it is currently just a technology. As with nuclear technology, rather than fearing AI or nuclear power itself, we should focus on those who control it.

What can governments do?

Legislation and regulation? If legislation slows down development or usage, it is unlikely to be successful, as it would hinder technological advancement in their own regions. Monetary fines as a deterrent when problems arise are insignificant to tech giants. The fines may ultimately be passed on to consumers, and they might not even help victims when harm has already occurred. Given that governments may lag behind tech giants in technology, could they learn from traditional home appliances and ensure that technology undergoes testing before use or upgrade to protect the public?

The Science Fiction Answer:

The real solution may lie in the Metaverse. The Metaverse refers to a complete societal system based on the internet, transcending single communities, and where rules are no longer decided by a minority. How can the Metaverse community embody this? In the virtual space of the Metaverse, laws can transition from written texts to program codes using smart contracts written on the blockchain.

Users can participate and vote on laws using avatars. If users don’t understand programming, they can delegate the decision-making to representative users, much like how we may not fully understand legal texts but rely on representatives. The significant difference is that these representative users can be changed at any time, and smart contracts on an immutable blockchain can be restored to previous versions if needed.
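
How revocable delegation and restorable rule versions might fit together can be sketched in a few lines of Python. This is a thought experiment, not a real smart contract: `ToyGovernance` and its methods are invented names, delegation is only one level deep, and there is no blockchain underneath. The "immutable yet restorable" idea is modelled by never deleting old versions; restoring one simply appends a copy of it as the newest version.

```python
class ToyGovernance:
    """Hypothetical sketch of delegated voting over versioned rules."""

    def __init__(self, initial_rule):
        self.history = [initial_rule]   # append-only: old versions are never erased
        self.delegates = {}             # user -> representative (changeable any time)

    @property
    def current_rule(self):
        return self.history[-1]

    def delegate(self, user, representative):
        self.delegates[user] = representative

    def revoke(self, user):
        self.delegates.pop(user, None)

    def tally(self, users, direct_votes):
        """Count one vote per user, following a delegation if the user did not vote."""
        yes = no = 0
        for user in users:
            vote = direct_votes.get(user)
            if vote is None:
                vote = direct_votes.get(self.delegates.get(user))
            if vote is True:
                yes += 1
            elif vote is False:
                no += 1
        return yes, no

    def propose(self, new_rule, users, direct_votes):
        yes, no = self.tally(users, direct_votes)
        if yes > no:
            self.history.append(new_rule)
        return yes > no

    def restore(self, version_index):
        """Rolling back appends a copy of an old version; history stays intact."""
        self.history.append(self.history[version_index])


gov = ToyGovernance("v1: posts are moderated by vote")
users = ["ann", "ben", "cat", "rep"]
gov.delegate("ann", "rep")
gov.delegate("ben", "rep")
votes = {"rep": True, "cat": False}
passed = gov.propose("v2: moderators serve one-year terms", users, votes)

gov.revoke("ben")                       # ben withdraws his delegation at any time
tally_after_revoke = gov.tally(users, votes)
gov.restore(0)                          # the community goes back to the first rule
```

Here `rep` carries the votes of `ann` and `ben` only for as long as they allow it, and the rollback leaves a full trail of what the rules were at every point.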

Similarly, if software is written on the user’s blockchain (public blockchain), it cannot be erased, and users have the right to choose upgrades or downgrades, reducing the impact of AI/tech company errors.

Back in reality, governments can certainly legislate to require that software offer a downgrade option before new versions are pushed. Tech giants may offer various reasons why this is not possible (e.g., technical difficulties, cost, user indifference). Some of these reasons may be true, but when we are indifferent, we let them make decisions on our behalf.

If we want tech giants to be regulated, we must first change our own habits to make that possible:

Zero trust in tech giants: reduce the amount of personal data we leave in their hands.

Minimize linking our identity to a single company: avoid using one company’s ID to log in to all services. It is like keeping multiple bank accounts, so that if one is unavailable during overseas travel, there is a backup.

Control our personal data: when data is in our hands, we have the right to choose different service providers.

People may feel that life will be less convenient, and that’s when they may join a digital citizen society based on the blockchain (sharing their computers as nodes). This would enable these organizations’ services (such as shared file storage) to become alternatives outside of tech giants. When there is a choice, tech giants are more likely to be regulated. At the same time, these decentralized blockchains could be the first step towards a true Metaverse.

As for AI’s impact on humanity, it shouldn’t be about jobs disappearing but rather a challenge to the value of learning for the sake of work and making money in a capitalist society. I’ll leave this aspect for philosophers to discuss.

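Original articles in Ming Pao

世紀.二元對坐: Science Fiction Makes Sense: Using Science Fiction to Anticipate Future Problems (Part 1) / Text: 天馬先生 / Editor: 呂晞頌 - 20230530 - Writers’ Column

世紀.二元對坐: Science Fiction Makes Sense: Using Science Fiction to Anticipate Future Problems (Part 2) / Text: 天馬先生 / Editor: 吳騫桐 - 20230606 - Writers’ Column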
