What happens when you type matters.news?

This article was first published on Matters Engineering Wiki.

In a prior article, we took a sneak peek at the architecture of matters.news, the tech stack we used to build the website and API, and how we designed the components to work smoothly together.

In this article, we take another angle, starting with a network request. First, we have a brief overview of how servers respond to users' requests, and then, we dive a little deeper into performance optimization.

An Overview of a Network Request

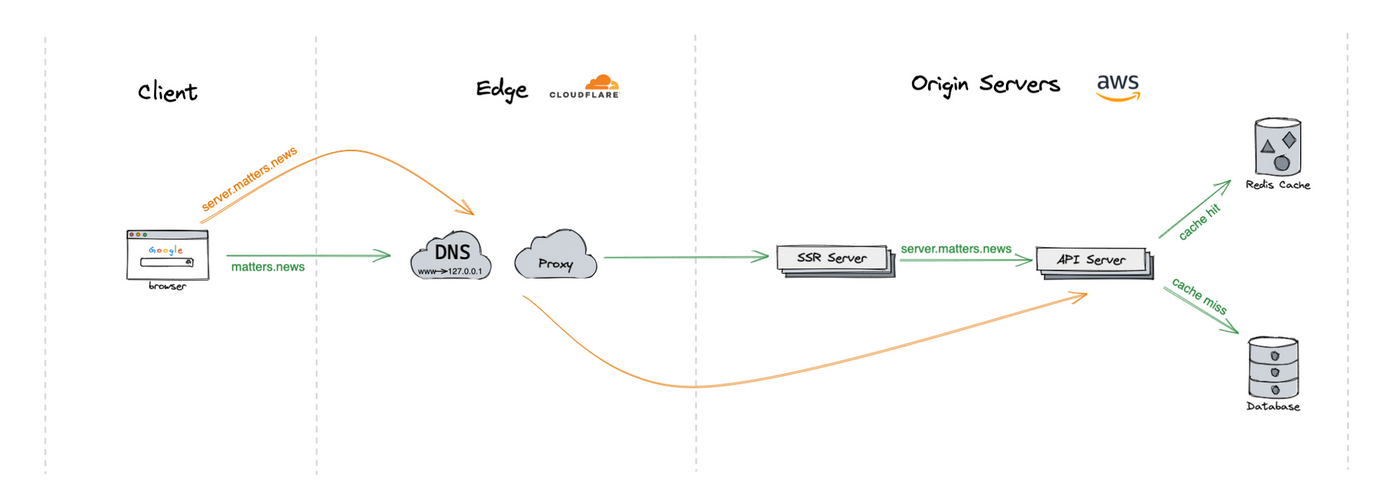

Client

Clients, usually web browsers, communicate with servers through HTTP requests.

When a user enters matters.news into the address bar, the browser first reaches out to the DNS resolver and gets the IP address behind this domain, then sends a request to that IP address, waits for a response, and renders the result in the browser.

Edge

At the network edge, we use Cloudflare to accelerate network requests and protect our origin servers. All DNS lookups and requests are processed by Cloudflare first.

A DNS lookup resolves to the nearest Cloudflare reverse proxy instead of our origin servers. The reverse proxy filters out malicious requests and improves performance through caching and smart routing.

Origin Servers

SSR (Server-side rendering) servers send pre-rendered HTML to the client. Internally, lots of API calls are sent to API servers on behalf of the client.

Once an API request (server.matters.news) hits the origin API server, it is served from the cache server if the same request was previously cached; otherwise, it is served from the database.

End-to-end

Let's put it all together.

- A user opens a URL that starts with the matters.news domain;

- The browser makes an HTTP request, which goes through the Cloudflare edge network to our SSR servers;

- The SSR servers send back HTML, which the browser renders;

- The user starts browsing other pages or interacting with the website;

- The browser makes subsequent API calls, which go through the Cloudflare edge network to our API servers.

Network Profiling

Network performance is the core of website performance. To optimize, we first need to understand the network traffic. How many requests are sent? How fast can servers respond?…

We will cover some key metrics that we keep monitoring and improving over time.

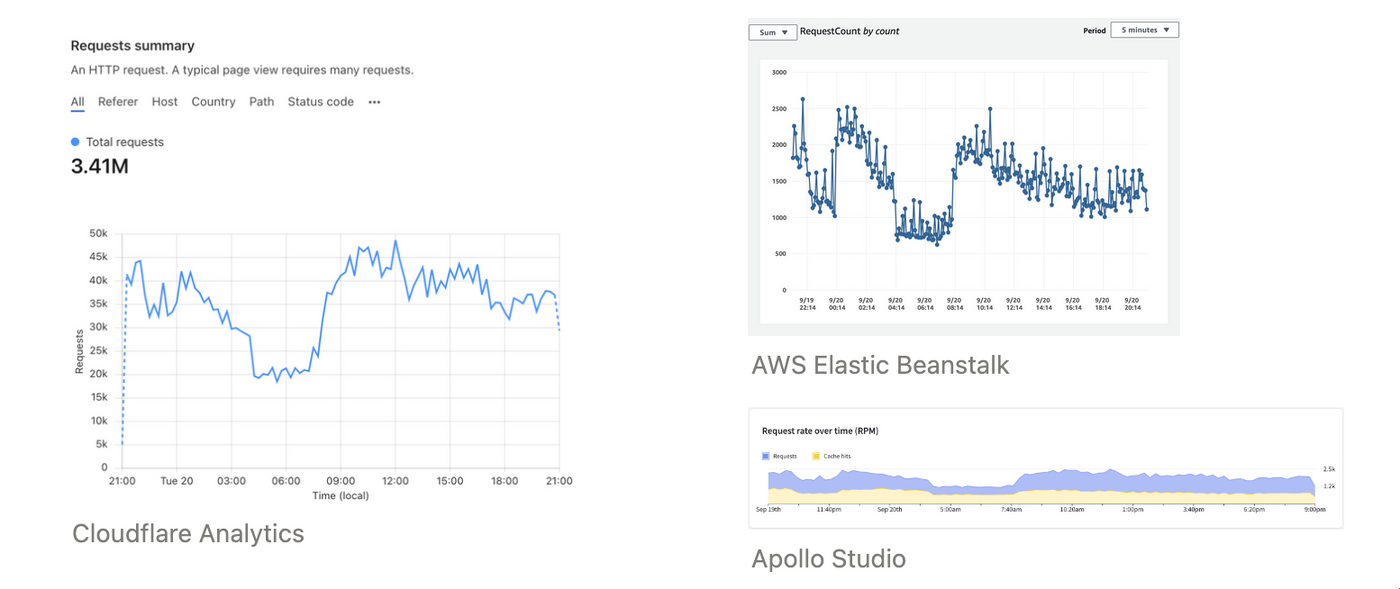

Total Request

The total number of requests over time is the most basic and intuitive metric for understanding the state of the network.

Cloudflare Analytics shows how many requests reach the edge, while AWS Elastic Beanstalk and Apollo Studio show how many hit the origin servers.

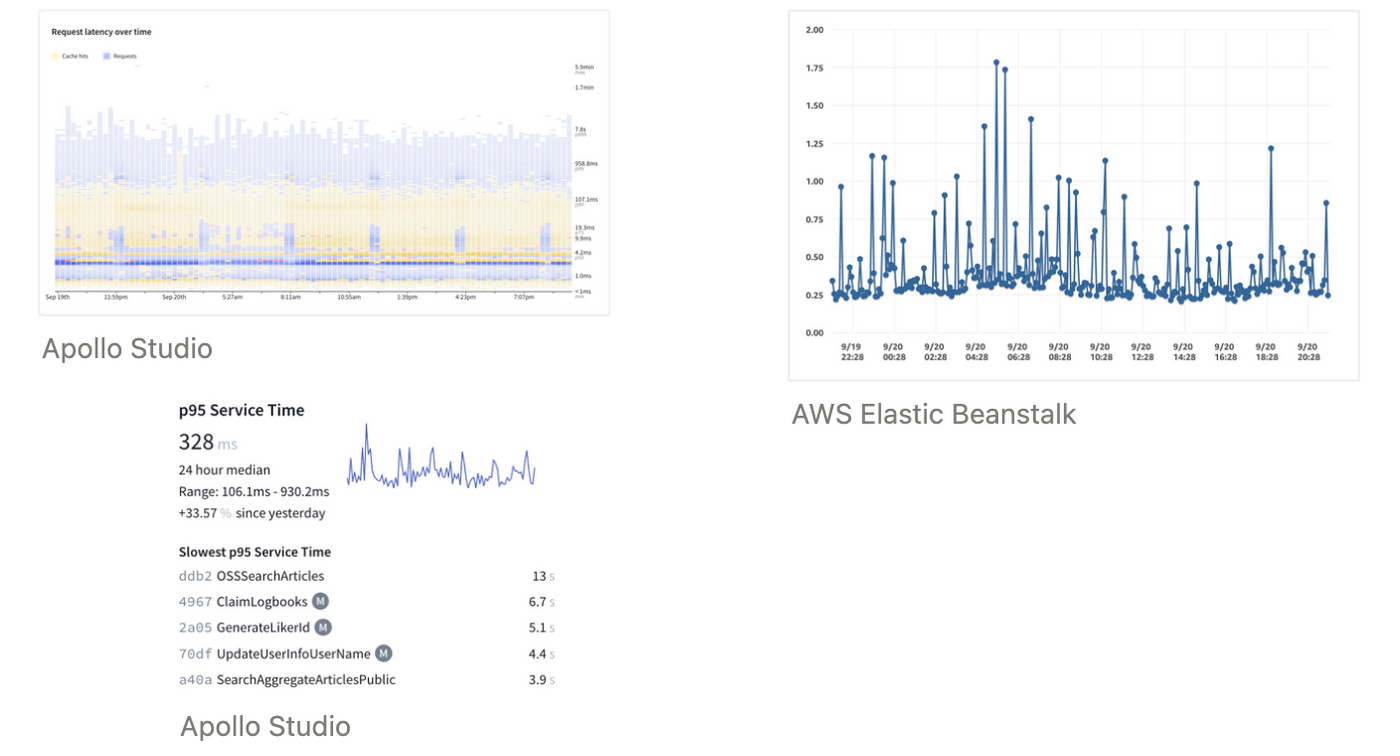

Response Time

Response Time is the amount of time an API server takes to process a request and respond to the client.

We can see the percentiles of response time (e.g. P50, P95) for all requests or for a single request type (GraphQL operation). This is very helpful for identifying which GraphQL operations are the “victims” of slow response times.
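To make the percentile idea concrete, here is a minimal sketch that groups response times by operation and computes P50 and P95 with the nearest-rank method. The operation names and timings are made up for illustration, and monitoring tools may use a different interpolation method.

```python
import math
from collections import defaultdict


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def percentiles_by_operation(records, ps=(50, 95)):
    """records: iterable of (operation_name, response_time_ms) pairs."""
    grouped = defaultdict(list)
    for operation, duration_ms in records:
        grouped[operation].append(duration_ms)
    return {
        operation: {f"P{p}": percentile(times, p) for p in ps}
        for operation, times in grouped.items()
    }


# Hypothetical operations and made-up timings in milliseconds.
records = [("ArticleDetail", t) for t in (40, 45, 50, 55, 60, 65, 70, 80, 90, 900)]
records += [("ViewerFollowState", t) for t in (10, 12, 11, 13, 12)]
stats = percentiles_by_operation(records)
```

In this sample, `ArticleDetail` has a modest P50 but a P95 dominated by one slow outlier, which is exactly the kind of operation a per-operation breakdown helps surface.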

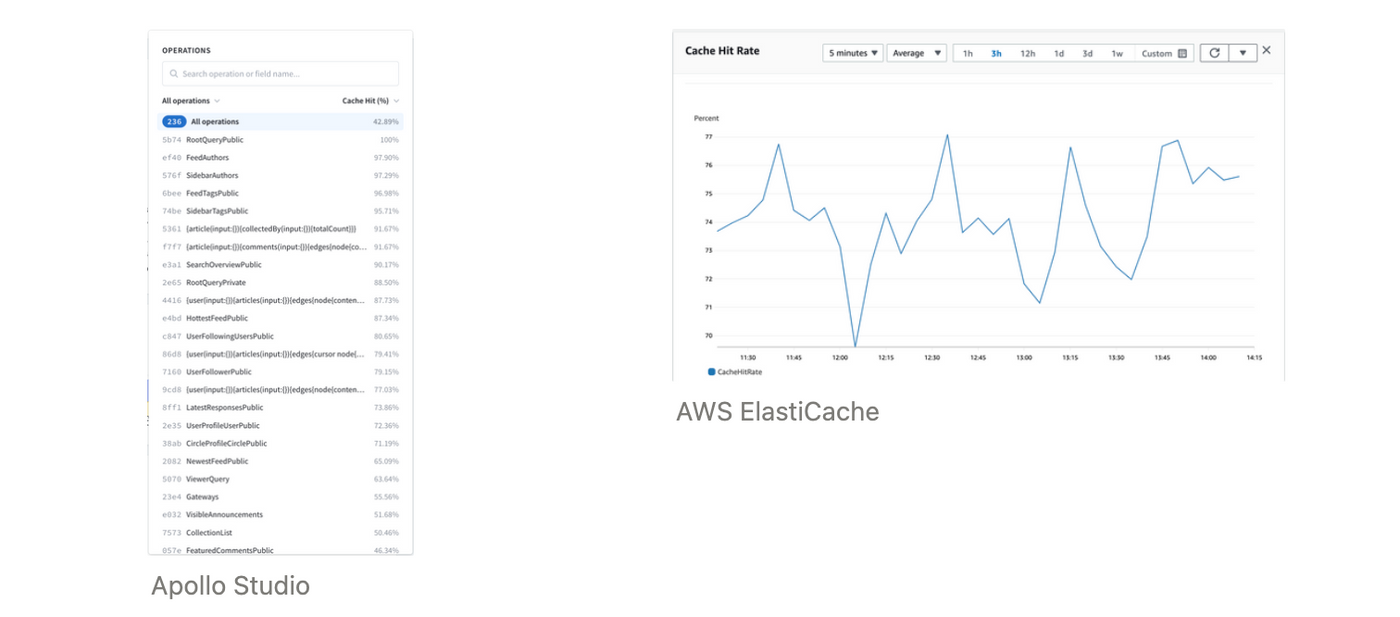

Cache Hit Ratio

Cache Hit Ratio tells us what percentage of requests are served without hitting the origin API servers or database. The higher the ratio, the faster the responses.
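As a rough illustration of why a higher ratio means faster responses, the sketch below computes the hit ratio and the expected average latency it implies. The latency figures are assumptions chosen for the example, not measurements from matters.news.

```python
def cache_hit_ratio(hits, misses):
    """Fraction of requests answered from cache; 0.0 when there is no traffic."""
    total = hits + misses
    if total == 0:
        return 0.0
    return hits / total


def expected_latency_ms(hit_ratio, cache_ms, origin_ms):
    """Average latency when hits cost cache_ms and misses cost origin_ms."""
    return hit_ratio * cache_ms + (1 - hit_ratio) * origin_ms


ratio = cache_hit_ratio(hits=900, misses=100)
# Assumed costs: 5 ms for a cache hit, 200 ms for a trip to the origin.
average_ms = expected_latency_ms(ratio, cache_ms=5, origin_ms=200)
```

With these assumed costs, moving the ratio from 0.5 to 0.9 cuts the average from roughly 102 ms to roughly 24 ms.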

Resource Utilization

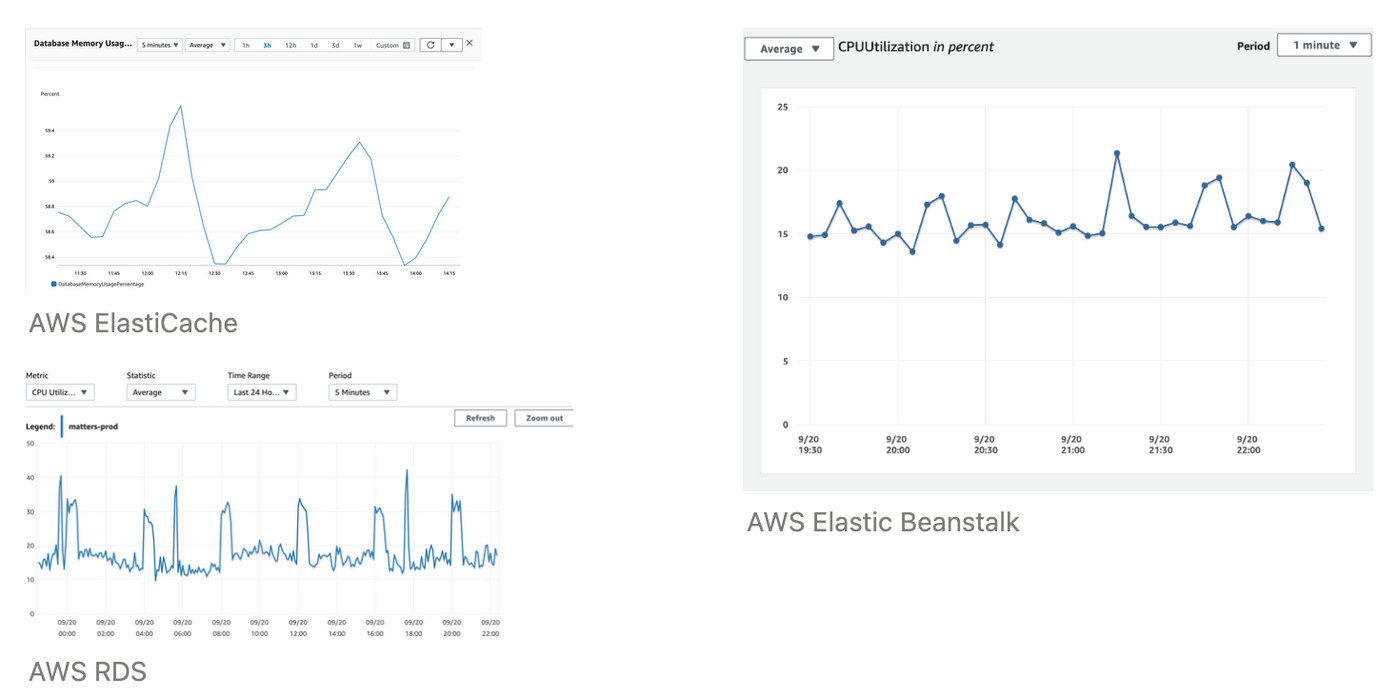

Our origin servers and databases don't auto-scale without limit, so CPU and memory utilization matter for both performance and reliability.

Performance Optimization

Many tools and services can help us improve the network performance, especially at the application layer, and here are some of the actions we have taken.

Firewall & Smart Routing

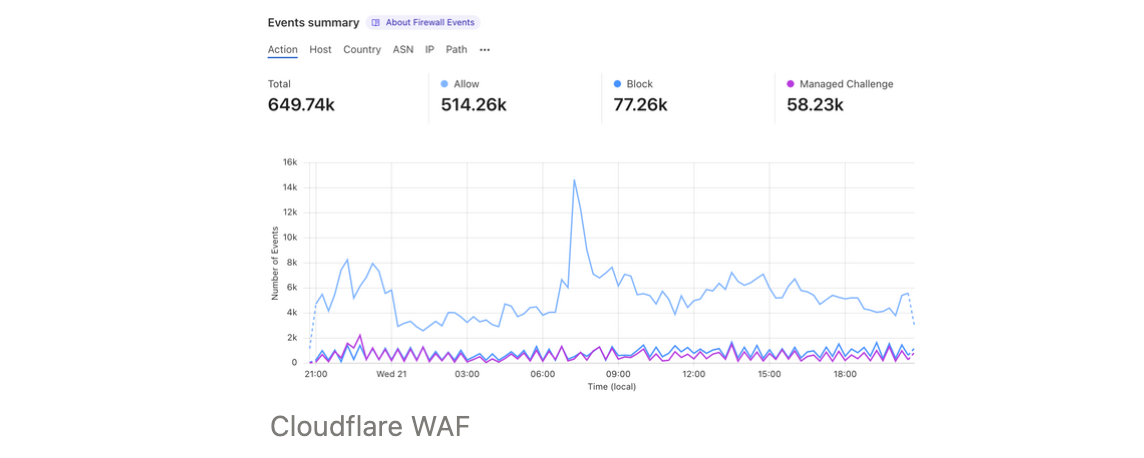

At the network edge, we use Cloudflare WAF to filter out malicious traffic and protect our origin servers. Firewall rules control incoming traffic by filtering requests based on geolocation, IP address, and HTTP headers, and rate limiting caps how frequently a client can send requests.
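To show what a rate limit does conceptually, here is a minimal sliding-window sketch. It is not how Cloudflare implements rate limiting (those rules are configured in Cloudflare itself); the class name, limits, and client key are assumptions for illustration.

```python
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most max_requests per client within any window_seconds span."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._accepted = defaultdict(deque)  # client key -> timestamps of accepted requests

    def allow(self, client_key, now):
        accepted = self._accepted[client_key]
        # Forget accepted requests that have fallen out of the window.
        while accepted and now - accepted[0] >= self.window_seconds:
            accepted.popleft()
        if len(accepted) >= self.max_requests:
            return False
        accepted.append(now)
        return True


# Hypothetical rule: 3 requests per 10 seconds per IP address.
limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=10)
decisions = [limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 3, 11)]
```

The timestamp is passed in explicitly so the behaviour is easy to reason about: the fourth request inside the window is rejected, and the client is allowed again once earlier requests age out.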

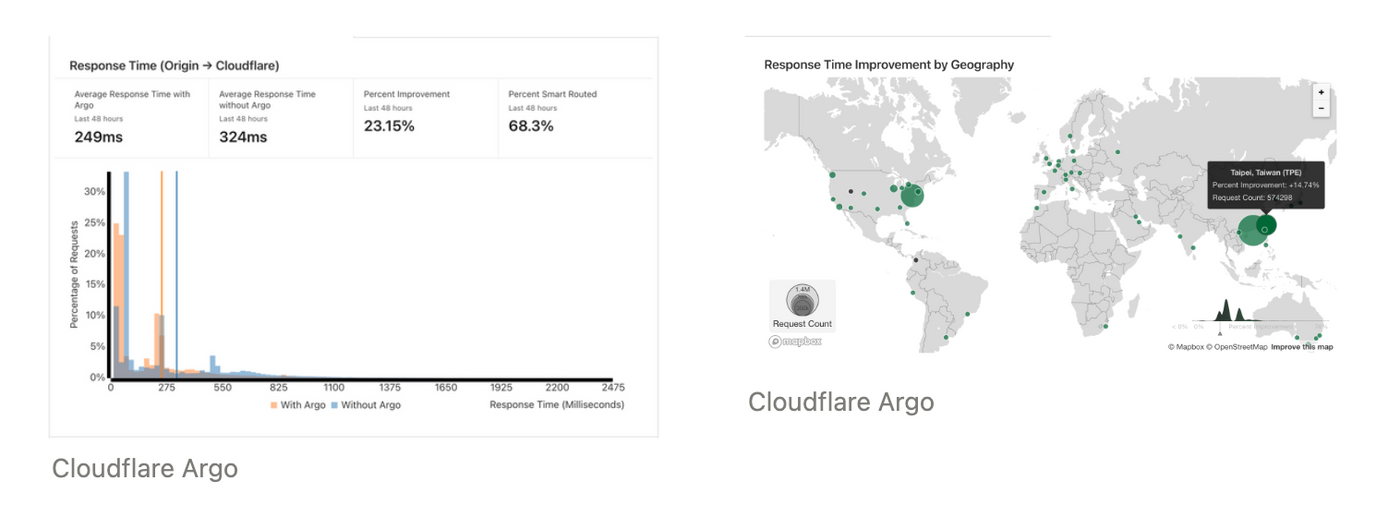

Since API responses are dynamic, real-time data and hard for the Cloudflare CDN to cache, we enabled Cloudflare Argo to route traffic based on network conditions, picking the fastest and most reliable network paths to our origin servers.

CDN

Unlike API responses, much of our user-generated content (UGC), such as images, is static and won't change once created. Our static content is distributed to AWS CloudFront's global network, then cached and served to users based on their geolocation.

In-Memory Caching

When an API request hits the origin servers, the response data is written to the cache (Redis) server before being sent back to the client.

We use AWS ElastiCache for Redis as our in-memory cache for the best IOPS and throughput performance.
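The flow described above can be sketched as a simple cache-aside lookup. This is a minimal illustration, not our production code: `InMemoryCache` is a stand-in for a Redis client, and the key format, TTL, and `load_article` resolver are hypothetical.

```python
import json
import time


class InMemoryCache:
    """Stand-in for a Redis client; only get/set with a TTL are modeled."""

    def __init__(self):
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            del self._store[key]  # expired entries behave like misses
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._store[key] = (value, time.monotonic() + ttl_seconds)


def handle_request(cache, cache_key, resolve, ttl_seconds=60):
    """Serve from cache when possible; otherwise resolve, cache, then respond."""
    cached = cache.get(cache_key)
    if cached is not None:
        return json.loads(cached), "hit"
    response = resolve()  # slow path, e.g. querying the database
    cache.set(cache_key, json.dumps(response), ttl_seconds)
    return response, "miss"


db_calls = []


def load_article():
    db_calls.append("article:42")  # record each trip to the (pretend) database
    return {"id": "42", "title": "Hello"}


cache = InMemoryCache()
first = handle_request(cache, "article:42", load_article)
second = handle_request(cache, "article:42", load_article)
```

The second call is answered from the cache, so the pretend database is queried only once.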

On top of Apollo’s GraphQL plugin, we also built a set of tools to control caching and invalidation. We made an effort on one of the two hard things in computer science.

Public/Private Splitting

API requests (GraphQL operations) are sent by either visitors or logged-in users. Responses to visitors are cached publicly, while responses to logged-in users are cached privately. Since private data is accessible only to a single logged-in user, we cache those API responses under a custom cache key that includes the user's ID.

Further, since matters.news is a public platform, most users land on public pages, such as the homepage and article pages, which are made up of mostly public data and a little private data (e.g. whether the viewer follows the author). We split the API request into two: one queries the public data and the other queries the private data.

As a result, the cache hit ratio of public queries has increased, and the load on the origin servers from processing private queries has decreased.
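A cache key along these lines could look like the sketch below. The key layout, the hashing choice, and the `cache_key` helper are assumptions for illustration; the point is only that a private key carries the viewer's ID while a public key does not.

```python
import hashlib
import json


def cache_key(query, variables, viewer_id=None):
    """Build a cache key; logged-in viewers get their own private scope."""
    scope = "public" if viewer_id is None else f"private:{viewer_id}"
    payload = json.dumps({"query": query, "variables": variables}, sort_keys=True)
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return f"{scope}:{digest}"


# Hypothetical query and viewer IDs.
query = "query Article($id: ID!) { article(id: $id) { title } }"
visitor_a = cache_key(query, {"id": "42"})
visitor_b = cache_key(query, {"id": "42"})
user_1 = cache_key(query, {"id": "42"}, viewer_id="u1")
user_2 = cache_key(query, {"id": "42"}, viewer_id="u2")
```

Two visitors share one entry, while each logged-in user gets a separate one, so private responses never leak between users.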
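The effect of the split can be illustrated with a toy simulation in which 100 logged-in viewers each load the same article page once. The key names and query kinds are hypothetical; the sketch only counts which queries miss the cache and reach the origin.

```python
from collections import Counter


def simulate(viewer_ids, split):
    """Count the queries that reach the origin, by kind, for one page load per viewer."""
    cached_keys = set()
    origin = Counter()

    def request(kind, key):
        if key not in cached_keys:  # cache miss: the origin has to do the work
            cached_keys.add(key)
            origin[kind] += 1

    for viewer_id in viewer_ids:
        if split:
            request("public", "public:article-page")
            request("private", f"private:{viewer_id}:follow-state")
        else:
            # One combined query must be cached per viewer because it contains private data.
            request("combined", f"private:{viewer_id}:article-page")
    return dict(origin)


viewers = [f"u{i}" for i in range(100)]
without_split = simulate(viewers, split=False)
with_split = simulate(viewers, split=True)
```

Without the split, the origin resolves the full page 100 times. With it, the heavy public part is resolved once and shared, and only the small private query runs per viewer.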

Depth Limiting

Different requests require different processing times depending on their complexity. We limit the complexity of a GraphQL query by its depth, which also helps secure our API server.
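As a simplified sketch of the idea, the depth of a query can be approximated by counting how deeply its selection sets are nested. Real implementations walk the parsed query AST; this brace-counting version is an assumption-laden shortcut that would miscount braces inside string arguments or input objects. The field names and the limit of 5 are hypothetical.

```python
def query_depth(query):
    """Approximate GraphQL query depth as the maximum nesting of curly braces."""
    depth = 0
    max_depth = 0
    for char in query:
        if char == "{":
            depth += 1
            max_depth = max(max_depth, depth)
        elif char == "}":
            depth -= 1
            if depth < 0:
                raise ValueError("unbalanced braces")
    if depth != 0:
        raise ValueError("unbalanced braces")
    return max_depth


def within_depth_limit(query, max_allowed):
    """Return True when the query is shallow enough to be executed."""
    return query_depth(query) <= max_allowed


shallow = "{ article(id: 1) { title author { displayName } } }"
deep = "{ user { followers { followers { followers { followers { id } } } } } }"
```

Here `shallow` has depth 3 and passes a limit of 5, while `deep` has depth 6 and would be rejected before any resolver runs.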

Indexing

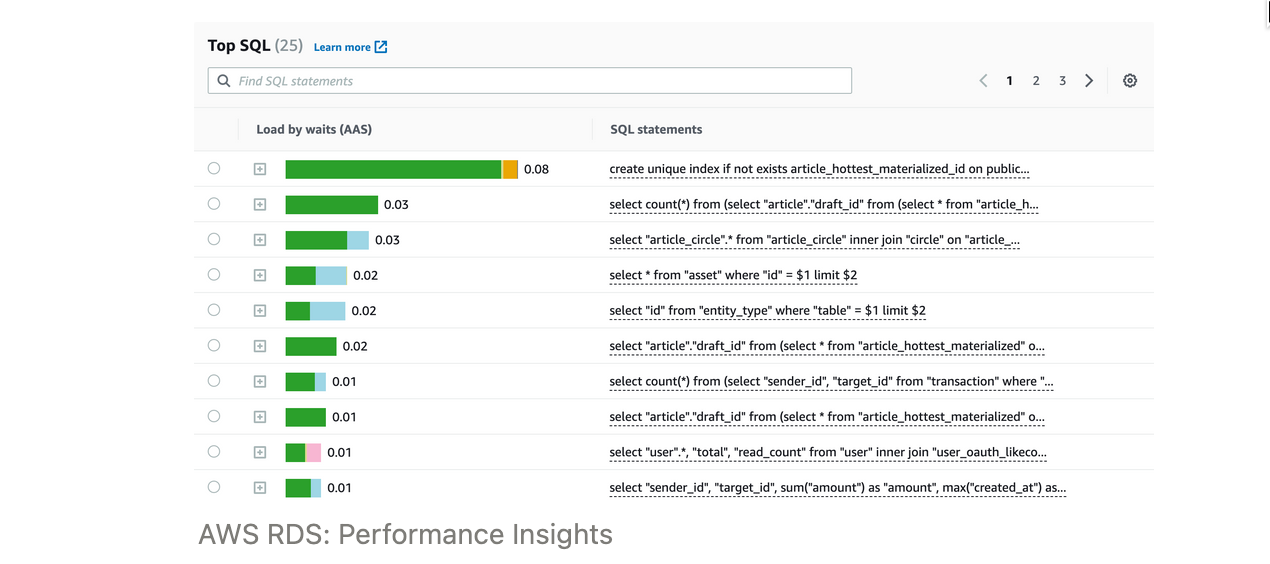

Finally, if a request is a cache miss, the data has to be queried from the database. We keep monitoring the queries that generate the most load and add indexes to speed them up and ease the load on the database.
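The effect of an index can be seen in a query plan. The sketch below uses SQLite only because it ships with Python, not because it is our database; the `article` table, its columns, and the index name are hypothetical.

```python
import sqlite3


def query_plan(conn, sql, params):
    """Return SQLite's query plan for a statement as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # the last column holds the plan detail


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE article (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT)")
conn.executemany(
    "INSERT INTO article (author_id, title) VALUES (?, ?)",
    [(i % 100, f"title {i}") for i in range(1000)],
)

sql = "SELECT title FROM article WHERE author_id = ?"
plan_before = query_plan(conn, sql, (7,))  # full table scan

conn.execute("CREATE INDEX idx_article_author_id ON article (author_id)")
plan_after = query_plan(conn, sql, (7,))  # lookup through the new index
```

Before the index exists, the plan reports a scan of the whole table; afterwards it reports a search using `idx_article_author_id`, and both plans return the same rows.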

Summary

There are many more ways to optimize network performance that we won't cover here. Ultimately, it all comes down to a few goals: minimize request count and complexity, maximize cache hit ratio, and minimize processing time.
